Use Of Signal Detection Theory

Signal Detection Theory: Detection theory, or signal detection theory, is a means of measuring the ability to differentiate between information-bearing patterns (called stimuli in living organisms and signals in machines) and random patterns that distract from the information (called noise, consisting of background stimuli and random activity of the detection machine and of the nervous system of the operator). In the field of electronics, the separation of such patterns from a disguising background is referred to as signal recovery.

Signal detection theory (SDT)

It is a framework for interpreting data from experiments in which accuracy is measured. It was developed in a military context (see Signal Detection Theory, History of) then applied to sensory studies of auditory and visual detection, and is now widely used in cognitive science, diagnostic medicine, and many other fields. The key tenets of SDT are that the internal representations of stimulus events include variability and that perception (in an experiment, or in everyday life) incorporates a decision process. The theory characterizes both the representation and the decision rule.

According to the theory, there are a number of determiners of how a detecting system will detect a signal, and where its threshold levels will be. The theory can explain how changing the threshold will affect the ability to discern, often exposing how adapted the system is to the task, purpose, or goal at which it is aimed. When the detecting system is a human being, characteristics such as experience, expectations, physiological state (e.g., fatigue), and other factors can affect the threshold applied. For instance, a sentry in wartime might be likely to detect fainter stimuli than the same sentry in peacetime because of a lower criterion; however, the sentry might also be more likely to treat innocuous stimuli as threats.

Signal Detection Theory Psychology

Have you ever done that thing where you could swear you hear your phone ringing or feel it vibrating in your pocket, but then you go to check it and nobody was calling? Of course, you have. We all have. It’s a common occurrence, and there’s actually a scientific reason for it. No, it’s not that you’re going crazy, and yes, I am aware that this is the first explanation that goes through everyone’s mind.

How do we notice these stimuli? Why do we sometimes not notice them, and why do we detect them when they’re not really there?

The leading explanation is signal detection theory, which, at its most basic, states that the detection of a stimulus depends on both the intensity of the stimulus and the physical/psychological state of the individual. Basically, we notice things based on how strong they are and on how much we’re paying attention. Want to learn more about this? Well, for this lesson to be intellectually stimulating, it looks like you’re going to have to pay attention.

Let’s start by looking at where the signal detection theory comes from.

From the beginning of the discipline, psychologists were interested in measuring our sensory sensitivity, how well we detect stimuli. The leading theory was that there was a threshold, a minimum value below which people could not detect a stimulus.

The only problem was that no firm threshold could be established. Some people heard a faint background noise easily, while others completely missed loud noises nearby. The results were simply too inconsistent for there to be a standard threshold. So, researchers started looking for a new explanation. What they found was that sensory sensitivity depends on both the strength of the signal and the observer’s level of alertness, and thus signal detection theory was born.


Signal Detection Theory Psychology Definition

A psychological theory regarding a threshold of sensory detection.

One of the early goals of psychologists was to measure the sensitivity of our sensory systems. This activity led to the development of the idea of a threshold, the least intense amount of stimulation needed for a person to be able to see, hear, feel, or detect the stimulus. Unfortunately, one of the problems with this concept was that even though the level of stimulation remained constant, people were inconsistent in detecting the stimulus. Factors other than the sensitivity of sense receptors influence the signal detection process. There is no single, fixed value below which a person never detects the stimulus and above which the person always detects it. In general, psychologists typically define threshold as the intensity of stimulation that a person can detect some percentage of the time, for example, 50 percent of the time.
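The 50 percent definition of threshold can be made concrete with a small sketch. The function name `threshold_50` and the data below are hypothetical, and linear interpolation between measured intensities is an assumption chosen for simplicity; real experiments typically fit a smooth psychometric curve instead.

```python
def threshold_50(intensities, detection_rates, target=0.5):
    """Estimate the intensity at which the detection rate crosses `target`.

    Uses linear interpolation between neighbouring measurements.
    Assumes `intensities` is sorted in ascending order.
    Returns None if the detection rate never crosses the target.
    """
    points = list(zip(intensities, detection_rates))
    for (x0, p0), (x1, p1) in zip(points, points[1:]):
        if p0 <= target <= p1 and p1 > p0:
            # Fraction of the way from x0 to x1 at which the target is reached.
            return x0 + (target - p0) * (x1 - x0) / (p1 - p0)
    return None


# Hypothetical data: proportion of "yes" responses at each intensity level.
intensities = [1, 2, 3, 4, 5]
rates = [0.05, 0.20, 0.40, 0.80, 0.95]
print(threshold_50(intensities, rates))  # roughly 3.25 for these made-up numbers
```

Because the same intensity is detected on some trials and missed on others, the estimate is a statistical summary rather than a hard cutoff.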

An approach to resolving this dilemma is provided by the signal detection theory.

This approach abandons the idea of a threshold. Instead, it treats detection as a decision that depends on the intensity of the stimulus, the sensitivity of the person, and cognitive factors. In other words, a person will be able to detect more intense sounds or lights more easily than less intense stimuli. Further, a more sensitive person requires less stimulus intensity than a less sensitive person would. Finally, when a person is quite uncertain as to whether the stimulus was present, the individual will decide based on what kind of mistake in judgment is worse: to say that no stimulus was present when there actually was one or to say that there was a stimulus when, in reality, there was none.

An example from everyday life illustrates this point.

Suppose a person is expecting an important visitor, someone that it would be unfortunate to miss. As time goes on, the person begins to “hear” the visitor and may open the door, only to find that nobody is there. This person is “detecting” a stimulus, or signal, that is not there because it would be worse to miss the person than to check to see if the individual is there, only to find that the visitor has not yet arrived.

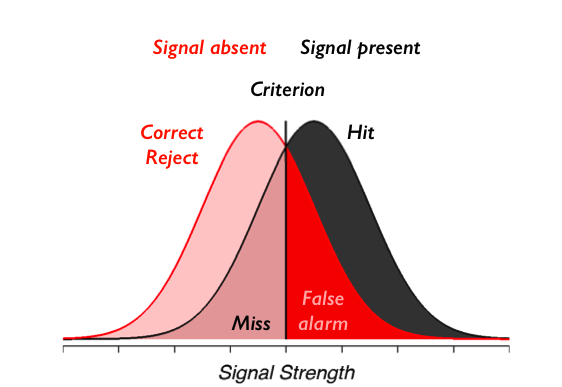

In a typical sensory experiment that involves a large number of trials, an observer must try to detect a very faint sound or light that varies in intensity from clearly below normal detection levels to clearly above. The person responds positively (i.e., there is a stimulus) or negatively (i.e., there is no stimulus). There are two possible responses, “Yes” and “No.” There are also two different possibilities for the stimulus, either present or absent. The accompanying table describes the combination of an observer’s response and whether the stimulus is actually there. The table refers to a task with an auditory stimulus, but it could be modified to involve stimuli for any sense.

Psychologists have established that when stimuli are difficult to detect, cognitive factors are critical in the decision an observer makes.

If a person participates in an experiment and receives one dollar for each Hit and there is no penalty for a False Alarm, it is in the person’s best interest to say that the stimulus was present whenever there is uncertainty.

On the other hand, if the person loses two dollars for each False Alarm, then it is better for the observer to be cautious in saying that a stimulus occurred. This combination of rewards and penalties for correct and incorrect decisions is referred to as the Payoff Matrix. If the Payoff Matrix changes, then the person’s pattern of responses will also change. This alteration in responses is called a criterion shift.
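A minimal sketch of how a Payoff Matrix can drive a criterion shift is given below. It assumes the observer picks whichever response has the higher expected payoff given a subjective probability that the signal was present. The function names are hypothetical, and the payoffs for Misses and Correct Rejections are assumed to be zero because the text does not specify them.

```python
def expected_payoff(p_signal, say_yes, payoff):
    """Expected payoff of a response when the observer believes the
    signal is present with probability `p_signal`."""
    if say_yes:
        return p_signal * payoff["hit"] + (1 - p_signal) * payoff["false_alarm"]
    return p_signal * payoff["miss"] + (1 - p_signal) * payoff["correct_rejection"]


def best_response(p_signal, payoff):
    """Return "yes" or "no", whichever has the higher expected payoff."""
    yes = expected_payoff(p_signal, True, payoff)
    no = expected_payoff(p_signal, False, payoff)
    return "yes" if yes >= no else "no"


# One dollar per Hit, no penalty for a False Alarm (other cells assumed zero).
lenient = {"hit": 1.0, "false_alarm": 0.0, "miss": 0.0, "correct_rejection": 0.0}
# Same reward, but two dollars lost for each False Alarm.
strict = {"hit": 1.0, "false_alarm": -2.0, "miss": 0.0, "correct_rejection": 0.0}

for p in (0.2, 0.5, 0.8):
    print(p, best_response(p, lenient), best_response(p, strict))
```

Under the lenient matrix the sketch answers "yes" at every level of uncertainty, while under the strict matrix it answers "yes" only when the signal is judged fairly likely, which is the criterion shift described above.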

SIGNAL DETECTION THEORY

| Status of Stimulus | Observer’s Decision: “Yes, there is a sound” | Observer’s Decision: “No, there is no sound” |
| --- | --- | --- |
| Stimulus is present | HIT: the sound is there and the observer detects it. | MISS: the sound is there, but the observer fails to detect it. |
| Stimulus is absent | FALSE ALARM: the sound is not there, but the observer reports hearing it. | CORRECT REJECTION: the sound is not there, and the observer correctly notes its absence. |

There is always a trade-off between the number of Hits and False Alarms. When a person is very willing to say that the signal was present, that individual will show more Hits, but will also have more False Alarms. Fewer Hits will be associated with fewer False Alarms. As such, the number of Hits is not a very revealing indicator of how sensitive a person is; if the person claims to have heard the stimulus on every single trial, then the person will have said “Yes” in every instance in which the stimulus was actually there. This is not very impressive, however, because the person will also have said “Yes” on every trial on which there was no stimulus. Psychologists have used mathematical approaches to determine the sensitivity of an individual for any given pattern of Hits and False Alarms; this index of sensitivity is called d’ (pronounced “d-prime”). A large value of d’ reflects greater sensitivity.
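The text does not give a formula for d’, so the sketch below assumes the commonly used equal-variance Gaussian model, in which d’ is the z-score of the Hit rate minus the z-score of the False Alarm rate. The function name `d_prime` and the trial counts are hypothetical, and the 0.5 correction added to each cell is one of several conventions for avoiding rates of exactly 0 or 1.

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections):
    """Sensitivity index d' = z(hit rate) - z(false-alarm rate).

    Assumes the equal-variance Gaussian model (not stated in the text).
    """
    # Add 0.5 to each cell so that rates of exactly 0 or 1, whose
    # z-scores are infinite, cannot occur.
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(fa_rate)


# An observer who says "Yes" on every trial: many Hits, but no sensitivity.
print(d_prime(hits=50, misses=0, false_alarms=50, correct_rejections=0))

# An observer whose "Yes" responses track the stimulus: d' well above zero.
print(d_prime(hits=40, misses=10, false_alarms=10, correct_rejections=40))
```

The first observer has a perfect Hit count yet a d’ of zero, which illustrates why Hits alone are not a revealing measure of sensitivity.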

The basic idea behind signal detection theory is that neurons are constantly sending information to the brain, even when no stimuli are present. This is called neural noise. The level of neural noise fluctuates constantly. When a faint stimulus, or signal, occurs, it creates a neural response. The brain must decide whether the neural activity reflects noise alone, or whether there was also a signal.
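The idea of a signal riding on fluctuating neural noise can be illustrated with a short simulation. This is only a sketch under stated assumptions: noise is modeled as a standard normal variable, a signal simply adds a fixed amount to it, and the observer says "yes" whenever the total exceeds a criterion. The function name `simulate` and all parameter values are hypothetical.

```python
import random


def simulate(signal_strength, criterion, n_trials=10_000, seed=0):
    """Simulate a yes/no detection task.

    Each iteration draws one noise-only trial and one signal-plus-noise
    trial. Returns (hit_rate, false_alarm_rate).
    """
    rng = random.Random(seed)
    hits = 0
    false_alarms = 0
    for _ in range(n_trials):
        noise_only = rng.gauss(0.0, 1.0)
        signal_plus_noise = rng.gauss(0.0, 1.0) + signal_strength
        # The observer responds "yes" when activity exceeds the criterion.
        false_alarms += noise_only > criterion
        hits += signal_plus_noise > criterion
    return hits / n_trials, false_alarms / n_trials


print(simulate(signal_strength=1.0, criterion=0.5))
print(simulate(signal_strength=1.0, criterion=-0.5))  # more willing to say "yes"
```

Lowering the criterion in this sketch raises the hit rate and the false-alarm rate together, because noise alone exceeds the lower bar more often.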

Signal Detection Theory Definition

I begin here with a medical scenario. Imagine that a radiologist is examining a CT scan, looking for evidence of a tumor. Interpreting CT images is hard and it takes a lot of training. Because the task is so hard, there is always some uncertainty as to what is there or not. Either there is a tumor (signal present) or there is not (signal absent). Either the doctor sees a tumor (they respond “yes”) or does not (they respond “no”). There are four possible outcomes: hit (tumor present and doctor says “yes”), miss (tumor present and doctor says “no”), false alarm (tumor absent and doctor says “yes”), and correct rejection (tumor absent and doctor says “no”). Hits and correct rejections are good. False alarms and misses are bad.
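The four outcomes can be written as a small lookup, sketched here with a hypothetical `classify` function and a made-up list of cases (each pair is whether a tumor is present and whether the doctor said "yes").

```python
from collections import Counter


def classify(signal_present, said_yes):
    """Map one trial to its signal detection outcome."""
    if signal_present:
        return "hit" if said_yes else "miss"
    return "false alarm" if said_yes else "correct rejection"


# Hypothetical cases: (tumor present?, doctor says "yes"?)
cases = [(True, True), (True, False), (False, True), (False, False), (True, True)]
counts = Counter(classify(present, yes) for present, yes in cases)
print(counts)
```

Tallying outcomes this way yields the four counts from which hit and false-alarm rates are computed.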

There are two main components to the decision-making process: information acquisition and criterion.

Information acquisition: First, there is information in the CT scan. For example, healthy lungs have a characteristic shape. The presence of a tumor might distort that shape. Tumors may have different image characteristics: brighter or darker, different texture, etc. With proper training, a doctor learns what kinds of things to look for. So with more practice/training they will be able to acquire more (and more reliable) information. Running another test (e.g., MRI) can also be used to acquire more information. Regardless, acquiring more information is good. The effect of information is to increase the likelihood of getting either a hit or a correct rejection while reducing the likelihood of an outcome in the two error boxes.

Criterion: The second component of the decision process is quite different. In addition to relying on technology/testing to provide information, the medical profession allows doctors to use their own judgment. Different doctors may feel that the different types of errors are not equal. For example, some doctors may feel that missing an opportunity for early diagnosis may mean the difference between life and death. A false alarm, on the other hand, may result only in a routine biopsy operation. These doctors may choose to err toward “yes” (tumor present) decisions.

Other doctors, however, may feel that unnecessary surgeries (even routine ones) are very bad (expensive, stressful, etc.). They may choose to be more conservative and say “no” (no tumor) more often. They will miss more tumors, but they will be doing their part to reduce unnecessary surgeries. And they may feel that a tumor, if there really is one, will be picked up at the next check-up. These arguments are not about information. Two doctors, with equally good training, looking at the same CT scan, will have the same information. But they may have a different bias/criterion.
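The separation between information and criterion can be sketched numerically. The example assumes an equal-variance Gaussian model, which the text does not specify: evidence on tumor-absent scans is distributed as N(0, 1), evidence on tumor-present scans as N(d', 1), and a doctor says "yes" when the evidence exceeds a personal criterion. The function name `rates` and the numbers are hypothetical.

```python
from statistics import NormalDist


def rates(d_prime, criterion):
    """Return (hit_rate, false_alarm_rate) for an observer with the given
    sensitivity and criterion under the equal-variance Gaussian model."""
    std = NormalDist()  # standard normal distribution
    hit_rate = 1 - std.cdf(criterion - d_prime)
    fa_rate = 1 - std.cdf(criterion)
    return hit_rate, fa_rate


# Two doctors with the same information (same d') but different criteria.
liberal = rates(d_prime=1.5, criterion=0.25)       # errs toward "yes"
conservative = rates(d_prime=1.5, criterion=1.25)  # errs toward "no"

print("liberal:      hit=%.2f  false alarm=%.2f" % liberal)
print("conservative: hit=%.2f  false alarm=%.2f" % conservative)
```

In this sketch the liberal doctor misses fewer tumors but raises more false alarms, and the conservative doctor does the reverse, even though both have identical sensitivity.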

What Is Signal Detection Theory

Many individuals and organizations make predictions about future events or detect and identify current states. Examples include stock analysts, weather forecasters, physicians, and, of course, intelligence analysts. Those making the predictions need to know how well they are doing and whether they are improving. Being able to assess prediction accuracy is especially important when evaluating new methods believed to improve forecast performance.


However, in a problem that is not unique to the intelligence community (IC), forecasters are notoriously reluctant to keep scorecards of their performance, or at least to make those scorecards publicly available. As discussed extensively in Tetlock and Mellers (this volume, Chapter 11), Intelligence Community Directive Number 203 (Director of National Intelligence, 2007) emphasizes process accountability in evaluating IC performance rather than accuracy.

This chapter suggests methods for improving the assessment of accuracy.

The chapter relies extensively on recent advances in assessing medical forecasts and detections, where signal detection theory specifically and evidence-based medicine more generally have led to many advances. Although medical judgment tasks are not perfectly analogous to IC analyses, there are enough strong similarities to make examples useful. Just as physicians often have to make quick assessments based on limited and sometimes conflicting information sources with no two cases ever being quite the same, so too intelligence analysts evaluate and characterize evolving situations using partial information from sources varying in credibility.